Two-Dimensional Convolutional Neural Networks

Overview

This study explored the effectiveness of two-dimensional convolutional neural network (2D-CNN) deep learning models for packet-based traffic classification in community networks, in terms of both computational efficiency and accuracy. We found that 2D-CNN models attain higher out-of-sample accuracy than both traditional support vector machine (SVM) classifiers and simpler multi-layer perceptron (MLP) neural networks with the same computational resource requirements. This accuracy gain, however, comes at the cost of slower prediction speed, which weakens the suitability of 2D-CNNs for real-time use. We also observed that reducing the size of the input supplied to the 2D-CNNs improves their prediction speed while still achieving higher accuracy than the simpler models. As a result, 2D-CNNs are shown to be strong candidates for real-time traffic classification in low-resource community networks.

Project Goals

The main aim of this study was to evaluate the effectiveness of 2D-CNNs for packet-based classification, compared to simpler MLP and SVM classifiers. In particular, we aimed to evaluate these models' suitability for community networks, where the ideal model combines the highest accuracy, the lowest computational resource requirements, and the fastest prediction speed. To achieve these aims, our experiments were designed to answer the following research questions:

- How much impact does the use of 2D-CNN deep learning models have on classification accuracy compared to the simpler MLP and SVM models given the same computational requirements?

- Are the classification models fast enough for real-time classification (in terms of the time taken to classify packets) – and how do the 2D-CNN, MLP and SVM models compare in terms of prediction speed (given the same memory and processing power resources)?

- To what extent can reducing the number of bytes used as input features to the model increase the prediction speed of 2D-CNN classifiers, and at what cost to the accuracy?

Architectures

The following model architectures were chosen for comparison over the course of the experiments. For more specific details, please see the final report here.

2D-CNNs

For the 2D-CNN networks, we considered two key architectures: a 'shallow' network with just one convolutional layer, and a 'deep' network with four convolutional layers. This allows us to assess whether the additional complexity of a deeper CNN is justified.

MLPs

For our baseline MLP models, against which the 2D-CNNs are compared, we again considered two main architectures: a 'shallow' network with one hidden layer, and a deeper network with three hidden layers. The shallow network provides a benchmark for evaluating the performance benefit offered by deep learning compared to 'shallow' learning.

SVM

Lastly, an SVM model is considered as a baseline against which to compare the neural networks. Since the SVM classifier is expected to be lightweight in memory usage and fast at classification, the neural network architectures need to show superior accuracy to justify their additional complexity.

Experiments

Two experiments were used to investigate the research questions.

- Experiment 1 involves comparing the models' accuracy and prediction speed (in packets classified per second) across a varying number of parameters, with reference to the first two research questions.

- Experiment 2 varies the number of bytes of each packet's payload used as model input, and evaluates the effect on the deep 2D-CNN's accuracy and prediction speeds to answer the third research question.

For each model type at a given parameter level, the hyperparameters were chosen by a grid search over the hyperparameter space, selecting the configuration with the highest accuracy on the validation set. For further details regarding the experimental set-up and design, see the full report here.
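The selection rule described above (try every hyperparameter combination, keep the one with the best validation accuracy) can be sketched in a few lines. This is a minimal illustration, not the project's code: `grid_search`, `toy_validation_accuracy`, and the hyperparameter names `lr` and `dropout` are hypothetical, and the toy scoring function stands in for actually training a model at a fixed parameter budget and scoring it on the validation set.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple


def grid_search(
    grid: Dict[str, List],
    validation_accuracy: Callable[[Dict], float],
) -> Tuple[Dict, float]:
    """Try every hyperparameter combination and keep the one with the
    highest validation accuracy."""
    names = list(grid)
    best_params, best_acc = None, float("-inf")
    # Enumerate the Cartesian product of all candidate values.
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        # Hypothetical callback: train a model with `params` at the fixed
        # parameter budget, then return its accuracy on the validation set.
        acc = validation_accuracy(params)
        if acc > best_acc:
            best_params, best_acc = params, acc
    return best_params, best_acc


# Toy stand-in for training + validation, peaking at lr=0.01, dropout=0.2.
def toy_validation_accuracy(p: Dict) -> float:
    return 1.0 - abs(p["lr"] - 0.01) - abs(p["dropout"] - 0.2)


best, best_acc = grid_search(
    {"lr": [0.1, 0.01, 0.001], "dropout": [0.0, 0.2, 0.5]},
    toy_validation_accuracy,
)
print(best)  # {'lr': 0.01, 'dropout': 0.2}
```

Exhaustive search is only practical for small grids; its cost is the product of the number of candidate values per hyperparameter.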

Results

Accuracy Results

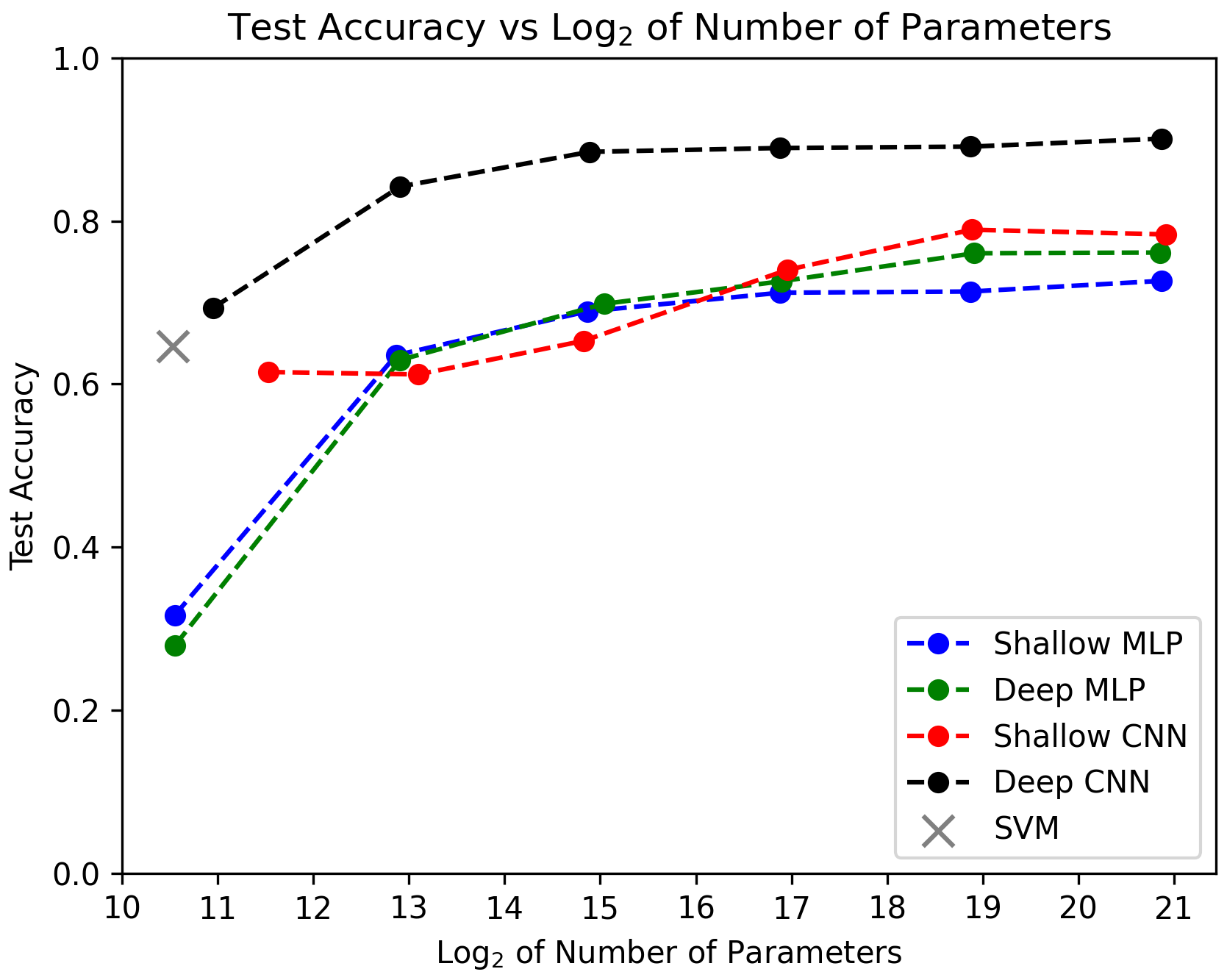

Figure 1 compares the deep 2D-CNN, shallow 2D-CNN, deep MLP, shallow MLP, and SVM models on the basis of their accuracy on the test set containing 24000 packets, for each given number of parameters.

It is clear that test accuracy increases with the number of parameters for every model. This matches our intuitive expectations, as more parameters give the models greater flexibility to fit patterns in the data. Notably, however, each model shows diminishing returns: the gain in test accuracy from adding parameters shrinks as the models grow.

Comparing the models' accuracy, we first note that the SVM classifier attained a test accuracy of 64.6% and, being the smallest model in terms of number of parameters, provides a baseline accuracy against which to compare the other models. Among the neural networks, the deep 2D-CNN clearly performs substantially better on the unseen test data than all of the other models across the whole range of model sizes, with the largest deep 2D-CNN attaining a test accuracy of 90.1%. If we were to choose the best model based solely on accuracy, we would therefore choose this deep 2D-CNN with log2 of the number of parameters equal to 21 (about 2.1 million parameters). In answer to our first research question, we can also conclude that, for a given model size, using 2D-CNN deep learning models yields substantially higher classification accuracy than the simpler MLP and SVM models.

The deep 2D-CNN outperforms the shallow 2D-CNN for every number of parameters, which shows the benefit that additional convolutional layers offer in fitting more complex patterns in the data. The shallow MLP, deep MLP, and shallow 2D-CNN perform similarly across the range of model sizes, although once the number of parameters is sufficiently large, the shallow 2D-CNN does outperform the MLP models. This is evidence that, given sufficient model complexity, the more sophisticated 2D-CNN models offer better out-of-sample performance than the baseline MLP and SVM models for the traffic classification task. We also observe that the deep MLP attains a higher test accuracy than the shallow MLP once log2 of the number of parameters exceeds 15, again showing the benefit of 'deep' learning when the model has sufficient flexibility.

Prediction Speed Results

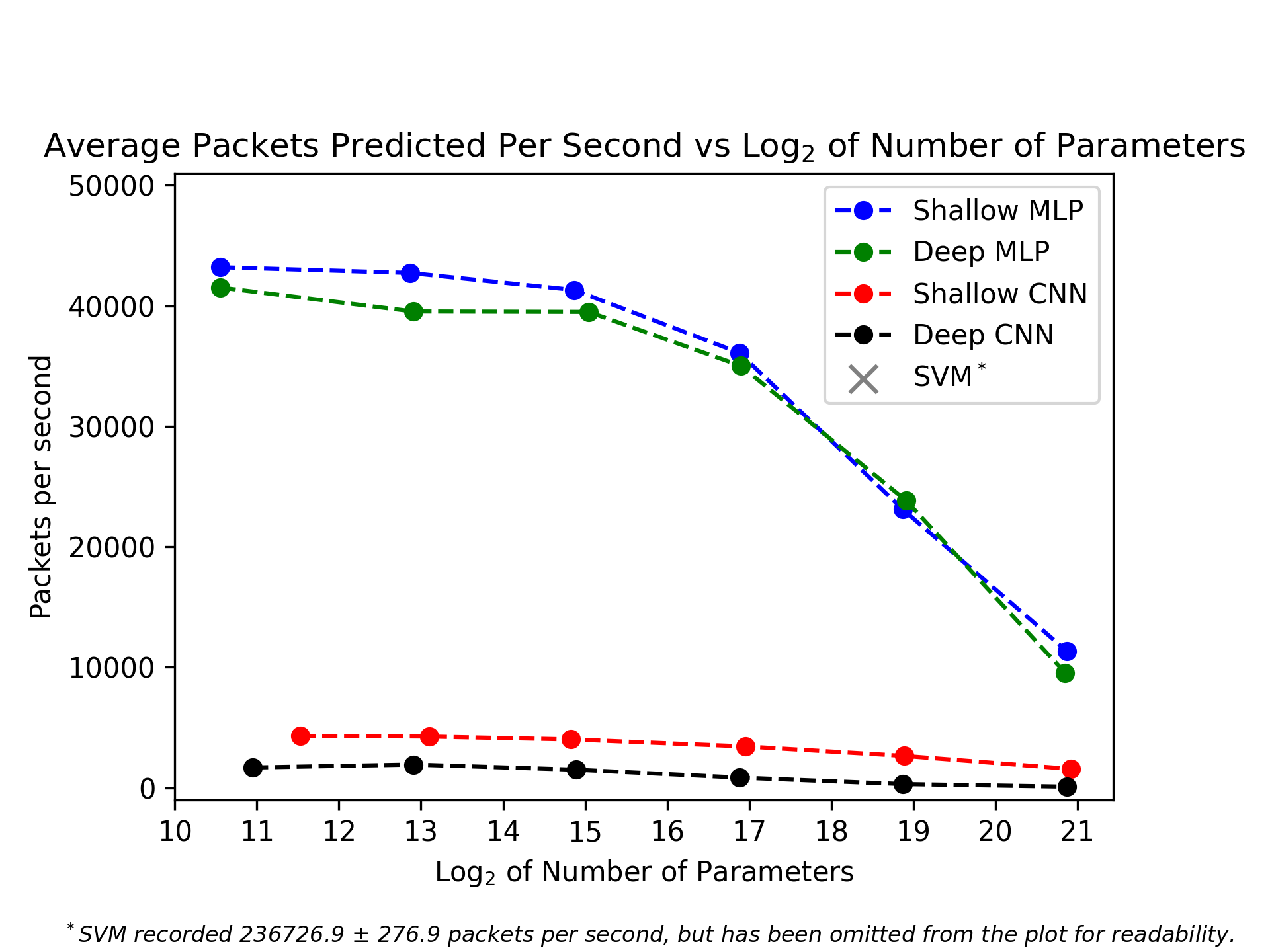

In Figure 2 we compare the prediction speed of the various models in terms of average number of packets per second that can be predicted by each model, for each given model size.
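The report does not describe its timing harness, so the following is only a sketch of one way to measure average packets per second: time a batch prediction several times and divide the total number of packets classified by the total elapsed time. `packets_per_second` and `toy_predict` are hypothetical names, and timing whole batches (rather than one packet at a time) is an assumption that typically yields higher throughput than per-packet timing.

```python
import time
from typing import Callable, Sequence


def packets_per_second(
    predict: Callable[[Sequence[bytes]], Sequence[int]],
    packets: Sequence[bytes],
    repeats: int = 3,
) -> float:
    """Average throughput of `predict`: total packets classified divided by
    total wall-clock time, over `repeats` passes through the batch."""
    total_time = 0.0
    for _ in range(repeats):
        start = time.perf_counter()
        predict(packets)
        total_time += time.perf_counter() - start
    # Guard against a zero timer reading on extremely fast runs.
    return repeats * len(packets) / max(total_time, 1e-12)


# Hypothetical stand-in classifier: label = parity of the payload byte sum.
def toy_predict(batch: Sequence[bytes]) -> list:
    return [sum(p) % 2 for p in batch]


packets = [bytes(range(64))] * 10_000
pps = packets_per_second(toy_predict, packets)
print(f"{pps:.1f} packets/s; meets 10000 pps benchmark: {pps >= 10_000}")
```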

The general trend is that the greater the number of parameters, the fewer packets per second a model can classify on average. This matches our expectations: for the MLPs, more parameters mean larger weight matrices in the matrix multiplications performed at prediction time, and for the CNNs, more parameters come from additional filters in the convolutional layers, each of which adds convolution operations. Both result in longer computation time per prediction.
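To make the link between filters, weight matrices, and parameter counts concrete, here is a sketch of the standard parameter formulas for a convolutional layer with square `kernel` x `kernel` filters and for a fully-connected layer, both including biases. The function names and the layer sizes in the usage lines are illustrative assumptions, not the architectures used in the report.

```python
def conv2d_params(kernel: int, c_in: int, c_out: int) -> int:
    """Parameters of a 2D convolutional layer with square kernel x kernel filters."""
    # Each of the c_out filters has kernel * kernel * c_in weights plus one bias.
    return (kernel * kernel * c_in + 1) * c_out


def dense_params(n_in: int, n_out: int) -> int:
    """Parameters of a fully-connected layer."""
    # An n_in x n_out weight matrix plus one bias per output unit.
    return (n_in + 1) * n_out


# Hypothetical layer sizes: doubling the number of filters doubles the
# convolutional layer's parameters.
print(conv2d_params(3, 1, 32), conv2d_params(3, 1, 64))  # 320 640
print(dense_params(64, 128))  # 8320
```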

The lightweight SVM classifier predicts on average 236726.9 packets per second, which is substantially faster than all other models considered; in fact, it classifies more than five times as many packets per second as the fastest MLP model. However, we take 10000 packets per second as an approximate benchmark for a model's ability to classify packets in real time on a relatively low-resource 10 Mbps network. The SVM's speed is therefore unnecessary for our low-resource purposes, but could offer value in a very high-capacity network that carries a very large traffic volume.
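The report does not spell out how the 10000 packets per second benchmark relates to a 10 Mbps link. One consistent reading, stated here as an assumption, is a fully loaded link whose packets average 125 bytes:

```latex
\frac{10 \times 10^{6}\ \text{bit/s}}{10^{4}\ \text{packets/s}}
  = 10^{3}\ \text{bit/packet}
  = 125\ \text{bytes/packet}
```

Since the required rate is the link speed divided by the mean packet size, a link whose packets average more than 125 bytes needs fewer than 10000 classifications per second, so under this assumption the benchmark is conservative for such traffic.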

Comparing the neural networks, we observe that the MLP classifiers are substantially faster than the 2D-CNNs for any fixed number of parameters. This is because each filter in a convolutional layer must be applied at every spatial position of the preceding layer's output (and the pooling operation likewise slides over the whole feature map). Each filter's parameters are therefore reused in many computations within a layer, which drives up computation time. In contrast, each weight in an MLP's fully-connected layer is used only once when classifying a packet. The same reasoning explains why the shallow 2D-CNN predicts faster than the deep 2D-CNN for a given number of parameters: fewer convolution operations need to be applied.
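The reuse argument can be made concrete by counting multiply-accumulate operations (MACs). The sketch below assumes stride 1 and 'same' padding, and ignores biases, activations, and pooling; the layer sizes are hypothetical. With the same 288 weights, the convolutional layer on an 8x8 input performs 64 times as many MACs as the dense layer, one factor for each spatial position at which its filters are applied.

```python
def conv2d_macs(height: int, width: int, kernel: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates for one conv layer with stride 1 and 'same' padding."""
    # The output has height x width positions, and every one of the c_out
    # filters (kernel * kernel * c_in weights each) is applied at every position.
    return height * width * kernel * kernel * c_in * c_out


def dense_macs(n_in: int, n_out: int) -> int:
    """Multiply-accumulates for one fully-connected layer."""
    # Each weight in the n_in x n_out matrix is used exactly once per prediction.
    return n_in * n_out


# Two hypothetical layers with the same 288 weights (biases ignored):
conv = conv2d_macs(8, 8, 3, 1, 32)  # 3*3*1*32 = 288 weights on an 8x8 input
dense = dense_macs(16, 18)          # 16*18 = 288 weights
print(conv, dense, conv // dense)   # 18432 288 64
```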

The MLP models are similar in prediction speed, with the shallow MLP generally able to classify slightly more packets per second than the deep MLP. This could be attributed to the lower overhead of performing fewer, larger matrix multiplications in the shallow MLP than the more numerous, smaller matrix multiplications in the deep MLP (with three hidden layers), for a fixed number of parameters.

We now consider the neural networks' suitability for real-time classification against the benchmark of 10000 packets per second. Although the deep 2D-CNN achieves the highest accuracy across the entire range of parameters, it is the slowest model and does not appear suitable for real-time classification at any model size: even the fastest of the small deep 2D-CNN models reaches just 1931.4 packets per second. The next experiment, however, explores how far this prediction speed can be improved, while maintaining high accuracy, by reducing the size of the input supplied to the 2D-CNNs.

The shallow 2D-CNNs only attained higher accuracy than the MLPs from log2 of the number of parameters of 17 onwards, and in this range the shallow 2D-CNN recorded a maximum of 3435.2 packets per second, which is again likely too slow for real-time classification relative to our benchmark. In contrast, both MLP models appear suitable for real-time classification across the range of parameters considered, since on average they classify more than 10000 packets per second.

The Effect of Reducing Input Size

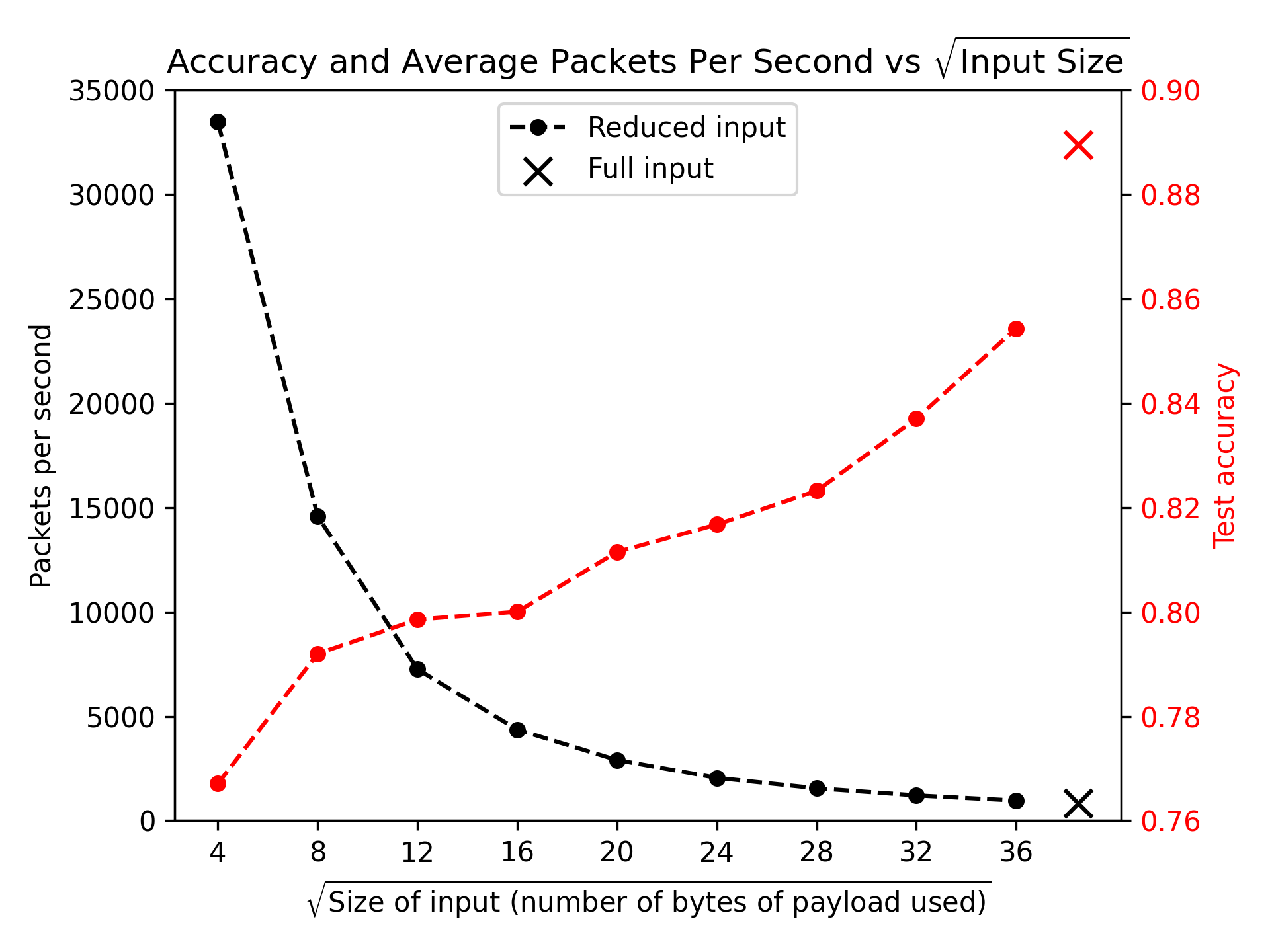

Figure 3 plots the test accuracy and average packets predicted per second for the deep 2D-CNN model across varying input sizes (that is, using only the first n bytes of the packet payload for some n).
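The report refers to a "root input size" (for example, 8 when the first 64 bytes are used), which suggests that the first n bytes are arranged as a square image of side sqrt(n) before being fed to the 2D-CNN. The following is a minimal sketch of that preprocessing under stated assumptions: zero-padding short payloads and scaling bytes to [0, 1] are our assumptions, and `payload_to_image` is a hypothetical name.

```python
import math
from typing import List


def payload_to_image(payload: bytes, n_bytes: int) -> List[List[float]]:
    """Keep the first n_bytes of a payload and lay them out as a square grid."""
    side = math.isqrt(n_bytes)
    if side * side != n_bytes:
        raise ValueError("n_bytes must be a perfect square, e.g. 16, 64 or 256")
    # Truncate long payloads and zero-pad short ones to exactly n_bytes (assumption).
    data = payload[:n_bytes].ljust(n_bytes, b"\x00")
    # Scale each byte to [0, 1] (assumption) and fill the grid row by row.
    return [[data[r * side + c] / 255.0 for c in range(side)] for r in range(side)]


img = payload_to_image(bytes(range(100)), 64)  # root input size 8
print(len(img), len(img[0]))                   # 8 8
```

Halving the root input size quarters the number of input bytes, and, for stride-1 convolutions, roughly quarters the number of positions at which each filter in the first layer is applied.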

The general trends we observe match our expectations. As we reduce the number of bytes used as model input, test accuracy decreases, because the information contained in the later bytes of the payload is lost. However, reducing the number of input bytes increases the average number of packets that can be classified per second (since fewer convolutions are performed in the convolutional layers and fewer pooling operations in the pooling layers), improving the model's suitability for real-time classification.

The key question is whether reducing the input size can make the 2D-CNN suitable for real-time classification while maintaining high accuracy. Recall that the deep 2D-CNN at this model complexity (log2 of the number of parameters equal to 17) attained an accuracy of 88.95% using the full payload, but an average prediction speed of only 851.3 packets per second, far below our benchmark of 10000 packets per second. When the root input size (the square root of the number of input bytes) is 8 or less, i.e. when only the first 64 bytes or fewer are used, the average number of packets per second exceeds 10000, meeting our benchmark for real-time classification. At a root input size of 8, the associated test accuracy is 79.2%, which is higher than that of the MLP and SVM models deemed suitable for real-time classification. For faster networks, we could use even fewer input bytes (just the first 16 bytes) to reach 33472.0 packets per second at 76.7% accuracy, which still exceeds the highest observed MLP accuracy.
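The trade-off above amounts to a simple selection rule: among the input sizes that meet the speed benchmark, pick the most accurate one. The sketch below encodes only the three deep 2D-CNN configurations whose figures are quoted in this report (Figure 3 contains further input sizes not listed here); `InputSizeResult` and `most_accurate_real_time` are hypothetical names.

```python
from typing import List, NamedTuple, Optional


class InputSizeResult(NamedTuple):
    n_bytes: Optional[int]     # None means the full payload was used
    accuracy: float            # test accuracy, in percent
    packets_per_second: float  # average prediction speed


# Deep 2D-CNN with log2(number of parameters) = 17, figures quoted in this report.
RESULTS: List[InputSizeResult] = [
    InputSizeResult(None, 88.95, 851.3),
    InputSizeResult(64, 79.2, 14581.5),
    InputSizeResult(16, 76.7, 33472.0),
]


def most_accurate_real_time(
    results: List[InputSizeResult], min_pps: float = 10_000.0
) -> Optional[InputSizeResult]:
    """Most accurate configuration whose speed meets the real-time benchmark."""
    eligible = [r for r in results if r.packets_per_second >= min_pps]
    return max(eligible, key=lambda r: r.accuracy) if eligible else None


print(most_accurate_real_time(RESULTS).n_bytes)          # 64
print(most_accurate_real_time(RESULTS, 20_000).n_bytes)  # 16
```

Raising `min_pps` for a faster link moves the choice towards smaller inputs, mirroring the 16-byte option discussed above.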

This shows that, with a reduced input size, the 2D-CNN can be suitable for real-time classification while still outperforming the other classification models in accuracy. Reducing the number of input bytes can therefore substantially improve the prediction speed of the 2D-CNN without sacrificing so much accuracy that it loses its advantage over the simpler models.

Conclusions

The experiments demonstrated that 2D-CNN models are superior to the baseline SVM and MLP models when compared solely on classification accuracy, given the same computational resources (in terms of memory, as measured by the number of parameters). In answer to our first research question, we conclude that 2D-CNNs have a significant impact on classification accuracy compared to the simpler models. Notably, the largest deep 2D-CNN attained an accuracy of 90.1% on the test set, indicating excellent out-of-sample performance. The neural network architectures attained higher test accuracies than the traditional SVM model, which is evidence of the added predictive power that these deep learning architectures offer over a traditional machine learning approach to traffic classification. The benefits of 'deep' over 'shallow' learning were also highlighted: the deep 2D-CNNs outperformed the shallow 2D-CNNs at every model size, and the deep MLPs outperformed the shallow MLPs once the models were sufficiently large.

Our second research question asked whether the classifiers are fast enough for real-time classification, and how they compare on this basis. We found that, despite offering the strongest predictive power on out-of-sample data, the 2D-CNN models were substantially slower at prediction than the other models, which weakens their suitability for real-time classification. In comparison, the MLP and SVM models classified packets at a much faster rate, which is more appropriate for real-time use.

However, we observed that by using only the first 64 bytes of the payload as model input, the prediction speed of the deep 2D-CNN can be increased to 14581.5 packets per second while maintaining an accuracy of 79.2%. This exceeds the benchmark of 10000 packets per second for real-time classification on a 10 Mbps network, and still offers superior out-of-sample performance relative to the other models considered. This answers our third research question: reducing the input size significantly improves the prediction speed of 2D-CNNs, and the resulting loss of accuracy does not erase their accuracy advantage over the baseline models. As a result, we recommend this 2D-CNN approach as the most suitable for real-time classification in community networks.