LM Entailment

A Proof of Concept of the Implementation of LM Entailment

LM Entailment is an extension of the Rational Closure Construction and Algorithm suggested for reasoning in the KLM-Approach. It extends the Rational Closure Algorithm from dealing with Conditional Knowledge Bases to Typical Knowledge Bases in PTL. With this extension, the consequence operator can no longer satisfy all ten postulates postulates put forward for it to be a rational conditional.

The postulate LM Entailment focuses on satisfying is the Single Model Postulate. The LM Preference Relation ($⊴_{LM}$) is what the method uses to ensure that a Single Minimal Model is produced. The Single Minimal Model is referred to LM-Minimal Model. The consequence operator for LM Entailment satisfies all of the postulates except for the Strict Entailment Postulate (P8). It is important to note that it does satisfy Conditional Strict Entailment and Classical Entailment.

With focusing on satisfying the Single Model Postulate, LM Entailment does lose the presumption of typicality and some conclusions that may want to be made, may not be able to made. LM Entailment is much less computationally expensive because of this. The choice between PT Entailment and LM Entailment should be made dependent on the context.

The LM Algorithm

The algorithm implemented to produce the LM-Minimal Model is shown below. This algorithm is based on what was put forward in previous literature.

- Step 1: Start with an initial Ranked Model where all the interpretations are on the first level: $L_0=\mathcal{U}$

- Step 2: Separate the interpretations which do not contradict the knowledge base with regards to the current ranked model from those that do. The set that does contradict is defined as C. This is shown as $C_{i}\mathrel{\mathop:}= [[\mathcal{K}]]^{\mathcal{R}_i}$

- Step 3: If the set of interpretations being moved is equivalent to the current level, return the current Ranked Model with the current level set to the infinite level: If $C_{i} = L_i$, return $\mathcal{R}_i$

- Step 4: If not move all the interpretations in $C_i$ up a level ($L_{i+1}=C_i$) in the Ranked Model (creating a new Ranked Model) and go to Step 2.

The LM Implementation

The algorithm and entailment check was coded in Python. The PyMiniSolvers repository was used as an interface for MiniSAT. The implementation was divided into a conversion of an input knowledge base into a format compatible with the MiniSAT and then an entailment check. The following operators were used and represented in input format.

- $\lnot: -$

- $\lor: |$

- ⋀ : &

- $\rightarrow:$ ›

- $\bullet: *$

The conversion required conversion of the sentences to Conjunctive Normal Form. This means the sentences can only contain and, or, negation or typicality operators. Which means that all of the implication operators need to be replaced. Once that is completed, the negations were propagated to the innermost brackets. This was done recursively. Once this is completed, the or operators were propagated to the innermost brackets. This was also done recursively. Once this is done, the sentences can be converted to a format compatible with the SAT Solver.

What happens in the entailment check is dependent on what type of sentence is being checked. If the sentence is a classical sentence without typicality, entailment is checked in the same way it would be in Classical Propositional Logic with the typicality operator being ignored. If the sentence has typicality operator within it, the algorithm looks for what the typicality operator is applying to and does a classical entailment check with the sentence and the interpretations on the level for what the typicality operator is appling to is typical only.

Results

Tweety Example:

The Knowledge Base for the Tweety Example in PTL is as follows: $\mathcal{K}=$["$p\rightarrow b$", "$\bullet b \rightarrow f$", "$\bullet p \rightarrow \lnot f$"]. Where p, b and f stand for Penguins, birds and flying respectively.

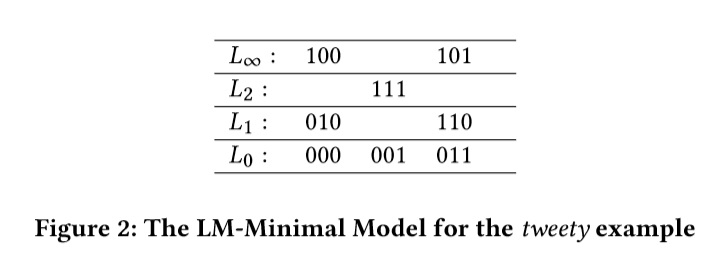

The LM-Minimal model produced is shown below. The first value represents the boolean value for penguin. The second value represents the boolean value for bird and the third value represents the boolean value for flying.

From this Ranked Model, the implementation was able to entail that $p\rightarrow \lnot \bullet b$ or that penguins are not typical birds. This corresponds to the intuition of the problem.

Flying Fish Example:

The Knowledge Base for the Flying Fish Example in PTL is as follows: $\mathcal{K}=$["$\bullet b\rightarrow f$", "$e \rightarrow \bullet f$", "$e \rightarrow \lnot b$", "$\bullet f \rightarrow w$"]. Where b, f, e and w stand for birds, flying, flying fish (Exocoetidae is the scientific name) and wings respectively.

The LM-Minimal model produced is shown below. The first value represents the boolean value for bird. The second value represents the boolean value for flying. The third value represents the boolean value for flying fish and the fourth value represents the boolean value for wings.

From this model, if you try to entail if flying fish have wings, it produces false as the answer which on the face of if would make sense, as the knowledge base says that Flying Fish are not typical flying things and typical flying things have wings. In this case it is not inferring whether or not Flying Fish have wings as it does not mention what non-typical flying things may have.