Interactive Question Answering

Policy-based & Environment Dynamics Model

Edan Toledo

Overview

This study explored the effectiveness of a policy-based method within textual environments and the use of a predictive environment dynamics model in the creation of generalisable, language-comprehending agents. Prior work has shown policy-based methods to have significantly better generalisation capabilities than their value-based counterparts. Additionally, the use of a predictive environment dynamics model, as proposed by Pathak et al. (2017), has been seen to further aid generalisation. This approach was originally used to provide agents with intrinsic motivation for efficient exploration. Yao et al. (2021) adapted it for text-based games to regularise the textual encoding and capture language semantics, showing an improvement in single-game performance.

Three critical research problems were identified:

- Are policy-based methods more suitable for text-based environments due to a seemingly more human-like, non-deterministic manner of decision making?

- Do policy-based methods generalise better in text-based environments?

- Does using a predictive environment dynamics model as a regularisation technique improve text-based environment generalisation?

We found that a policy-based method, trained using the REINFORCE with baseline algorithm, attained significantly higher results on the QAit benchmark. Additionally, the use of a predictive environment dynamics model proved to be a viable regularisation technique for text-based reinforcement learning when access to diverse data is not available.

Research Objectives

The main research objectives were to experimentally investigate and answer the following research questions:

- How much impact does the policy-based method have on training and testing accuracy?

- How much faster/slower is the policy-based method's training process?

- How much data does the policy-based method require to reach the same level of performance seen in baselines?

- Does the environment dynamics model improve the baseline model's performances?

- Does the environment dynamics model along with the policy-based method increase performance further?

The QAit Task

Please read about the QAit task before continuing. It provides the context needed to understand the experiment settings, the results and their metrics.

Architecture

High-level overview

QA-DQN Encoder and Question Answerer

Because this study focuses on an alternative to value-based methods, the agent retains the RL baseline's architecture for textual encoding and question answering. The transformer-based text encoder produces vector representations of the environment description and the question at each time step, while the aggregator combines them into a single representation. The final output of the transformer blocks is an encoded sequence that is used as input for the agent's policy and baseline functions as well as the question answerer. At each game step, the current game observation and question are processed and merged to produce the final state representation used by the agent. This gives the agent the context of the question at every time step, so it cannot forget the goal.

Architecture of QA-DQN Encoder and Question Answerer

Policy Architecture

The new agent reuses the existing text encoding architecture to learn a policy directly, along with the state-value function of the environment. The policy is a parameterised neural network responsible for all interactive decisions: it receives an observation from the environment and outputs the text command it deems best in that situation in order to maximise reward, i.e. answer the question. The policy consists of a shared linear layer and three separate output layers. The shared linear layer takes the output of the text encoder and outputs a fixed-size representation. Each consecutive output layer is conditioned on the previous output along with the shared linear layer. We did this in an attempt to create more legible commands, since consecutive words should be aware of the words that precede them. Each output layer represents the policy for one word in the command triplet (Action, Modifier, Object) and gives a probability distribution over the vocabulary. Each word in the command triplet is then sampled from its distribution to form the command at each game step. This more closely emulates how a human would speak compared to value-based methods, which act greedily and are deterministic.

The baseline approximates the state-value function to aid the training of the policy. The baseline network takes the output of the policy's shared linear layer as input to produce the state value V(s). This regularises the shared linear layer towards a common representation, ideally aiding both the policy and the value function in generalisation. Model weights are updated with the REINFORCE with baseline algorithm, which uses Monte-Carlo episodic sample returns to update the baseline and to calculate the advantage with which the policy is updated.

Beyond the classic REINFORCE with baseline implementation, the entropy of each policy (one per word in the command triplet) is included in the loss function. This incentivises the agent to learn more stochastic policies whilst maximising reward, which promotes exploration and generalisation.
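The update described above can be illustrated with a minimal sketch in plain Python. It is not the study's implementation: the discount factor `gamma`, the entropy weight `entropy_coef`, the squared-error baseline loss and all function names are assumptions introduced for illustration, and a real agent would compute these quantities on tensors with automatic differentiation.

```python
import math

def discounted_returns(rewards, gamma=0.9):
    """Monte-Carlo returns G_t = r_t + gamma * G_{t+1}, computed backwards.
    gamma is an assumed value; the source does not state a discount factor."""
    returns, g = [], 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    return returns[::-1]

def entropy(probs):
    """Shannon entropy of one categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def reinforce_losses(steps, rewards, gamma=0.9, entropy_coef=0.01):
    """steps holds one dict per game step with:
         'log_prob': summed log-probability of the three sampled words,
         'value'   : baseline estimate V(s),
         'dists'   : the three word distributions (for the entropy bonus).
    Returns (policy_loss, baseline_loss), each averaged over the episode."""
    returns = discounted_returns(rewards, gamma)
    policy_loss, baseline_loss = 0.0, 0.0
    for step, g in zip(steps, returns):
        advantage = g - step["value"]  # treated as a constant for the policy
        bonus = sum(entropy(d) for d in step["dists"])
        # Higher entropy lowers the loss, rewarding more stochastic policies.
        policy_loss += -step["log_prob"] * advantage - entropy_coef * bonus
        # Assumed squared-error regression of V(s) onto the sampled return.
        baseline_loss += (g - step["value"]) ** 2
    n = len(steps)
    return policy_loss / n, baseline_loss / n
```

Because the returns are only known once the episode ends, both losses are computed once per episode, which matches the once-per-episode weight update of REINFORCE.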

Architecture of Policy and Baseline
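The word-by-word sampling of the command triplet can be sketched as follows. This is an illustrative toy in plain Python, not the study's network: the ReLU on the shared layer, the one-hot encoding of the previously sampled word as the conditioning signal, the layer sizes, the example vocabulary and every helper name are assumptions.

```python
import math
import random

def linear(weights, bias, x):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]

def random_layer(rng, n_out, n_in):
    """Hypothetical initialiser returning (weights, bias)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def sample_command(encoded_state, shared, heads, vocab, rng):
    """Sample (action, modifier, object) one word at a time."""
    # Shared linear layer; the ReLU is an assumption.
    hidden = [max(0.0, h) for h in linear(*shared, encoded_state)]
    prev = [0.0] * len(vocab)  # the first head has no previous word
    words, log_prob = [], 0.0
    for head in heads:
        # Each head sees the shared representation plus the previous word.
        probs = softmax(linear(*head, hidden + prev))
        idx = rng.choices(range(len(vocab)), weights=probs)[0]
        words.append(vocab[idx])
        log_prob += math.log(probs[idx])
        prev = one_hot(idx, len(vocab))
    return tuple(words), log_prob

rng = random.Random(0)
vocab = ["go", "open", "north", "wooden", "door", "fridge"]
state_dim, hidden_dim = 8, 4
shared = random_layer(rng, hidden_dim, state_dim)
heads = [random_layer(rng, len(vocab), hidden_dim + len(vocab)) for _ in range(3)]
state = [rng.uniform(-1, 1) for _ in range(state_dim)]
command, log_prob = sample_command(state, shared, heads, vocab, rng)
print(command, log_prob)
```

The summed log-probability returned alongside the command is the quantity a policy-gradient update would weight by the advantage.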

Environment Dynamics Model

The learned encoder representations of textual state observations are only optimised for the policy and baseline loss functions. This can lead to severely overfitted, overly specific representations that are not useful for any environments beyond the training games. Such a representation can be completely devoid of language semantics, which are seen as important for an agent's ability to apply its knowledge to new environments. In text games, a semantically rich representation is proposed as a way to increase generalisation performance as well as prevent overfitting. Yao et al. (2021) show that an inverse dynamics model can be used to regularise Q-values, promote encoding of action-relevant observations, and provide a form of intrinsic motivation for exploration. A further evaluation of the transfer learning capabilities of semantically rich representations shows benefits for generalisation.

Architecture of Environment Dynamics Model

In this study, the environment dynamics model is used to improve generalisation capability and to illustrate its effectiveness as a regularisation technique. Inspired by Pathak et al. (2017), the environment dynamics model consists of a forward and an inverse dynamics model. The forward dynamics model takes in a state representation and an action to predict the next state representation. This attempts to model an agent's predictive understanding of the world. The inverse dynamics model takes two consecutive state representations and attempts to predict the action that caused the state transition. This attempts to model an understanding of world dynamics. Since each command can be seen as a triplet of (Action, Modifier, Object), the inverse model has three separate neural decoders, each of which attempts to predict one word in the triplet. Following initial experimentation, we use only the inverse model and its loss, as in Yao et al. (2021), to regularise the transformer's state representation. Differing from Yao et al., we do not use the inverse loss as an intrinsic reward, as it seemed to strongly inhibit training. We hypothesise that this occurs because different environments contain slightly altered dynamics, which creates too much noise in the reward function and ultimately prevents the loss from decreasing sufficiently. We do believe that there is a benefit to using the forward model, but this requires further experimentation.
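As a rough illustration of how the inverse loss can act as a regulariser, the sketch below sums one cross-entropy term per word of the command triplet and adds the result to the reinforcement learning loss. The weighting coefficient `inv_coef`, the function names and the use of plain probability lists are assumptions; the source does not specify how the two losses are combined.

```python
import math

def cross_entropy(probs, target_index):
    """Negative log-likelihood of the true word under one decoder's output."""
    return -math.log(probs[target_index])

def inverse_dynamics_loss(predicted, command):
    """predicted: three distributions over the vocabulary, one per decoder
    (action, modifier, object), each computed from two consecutive state
    representations. command: vocabulary indices of the command that was
    actually issued between those two states."""
    return sum(cross_entropy(p, t) for p, t in zip(predicted, command))

def total_loss(rl_loss, inv_loss, inv_coef=1.0):
    """Auxiliary regularisation: the inverse loss shapes the encoder through
    the training loss only and is never added to the reward.
    inv_coef is a hypothetical weighting."""
    return rl_loss + inv_coef * inv_loss

# Toy example with a four-word vocabulary and uninformative decoders.
uniform = [0.25, 0.25, 0.25, 0.25]
inv = inverse_dynamics_loss([uniform, uniform, uniform], command=(0, 2, 3))
print(total_loss(rl_loss=0.5, inv_loss=inv))
```

Keeping the inverse loss out of the reward signal mirrors the choice described above, where it is used for regularisation rather than as intrinsic motivation.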

Experiments

The primary experiments were:

- REINFORCE with Baseline

- REINFORCE with Baseline using Environment Dynamics Model

- DQN using Environment Dynamics Model

- Fixed Map with 1 game

- Random/Fixed Map with 500 games

Each experiment used a single GPU and took approximately 3 to 8 days, depending on the question type and experiment. For single-game experiments, policy-based agents were trained for 100 000 episodes, and DQN with semantic regularisation was trained for 200 000 episodes. This is because the policy-based methods converge much earlier. For the 500-game experiments, all agents were trained for 200 000 episodes.

Results

Selected results are presented. For a full set of results and discussion, please refer to the paper. Question Answering accuracies are shown first, with the Sufficient Information scores in brackets. Highlighted results indicate better testing QA results compared to the other agent.

Fixed Map

Trained on 1 Game

| Model | Location (Train) | Location (Test) | Existence (Train) | Existence (Test) | Attribute (Train) | Attribute (Test) |
| --- | --- | --- | --- | --- | --- | --- |
| Random | - | 0.027 | - | 0.497 | - | 0.496 |
| DQN | 0.972 (0.972) | 0.122 (0.160) | 1.000 (0.881) | 0.628 (0.124) | 1.000 (0.049) | 0.500 (0.035) |
| DDQN | 0.960 (0.960) | 0.156 (0.178) | 1.000 (0.647) | 0.624 (0.148) | 1.000 (0.023) | 0.498 (0.033) |
| Rainbow | 0.562 (0.562) | 0.164 (0.178) | 1.000 (0.187) | 0.616 (0.083) | 1.000 (0.049) | 0.516 (0.039) |
| DQN w/ Semantics | 0.962 (0.962) | 0.152 (0.158) | 1.000 (0.876) | 0.624 (0.122) | 0.998 (0.203) | 0.490 (0.063) |
| Policy-based | 1.000 (1.000) | 0.168 (0.172) | 1.000 (0.933) | 0.584 (0.217) | 1.000 (0.216) | 0.514 (0.060) |
| Policy-based w/ Semantics | 1.000 (1.000) | 0.182 (0.184) | 1.000 (0.932) | 0.630 (0.228) | 0.948 (0.197) | 0.494 (0.068) |

When training on one game, the policy-based agent overfits more than the baselines, achieving 100% accuracy on all three question types along with high sufficient information scores. We hypothesise that this is due to the policy-based agent making more efficient use of data and therefore overfitting to a greater extent in the same training period. Despite this, the testing performance of the policy-based method is still arguably better than that of the baseline methods: it shows similar question answering accuracy but a significant increase in sufficient information scores. This indicates that the policy itself is performing better with respect to navigation and interaction, but the overfitted QA model inhibits performance when trying to answer questions.

Using the environment dynamics model to promote semantic encoding and prevent overfitting appears to be successful. When training on a single game, the environment dynamics model helped the policy-based agent achieve the highest test-set QA accuracy for location and existence questions, even though the training accuracy for those question types was the same.

Trained on 500 Games

| Model | Location (Train) | Location (Test) | Existence (Train) | Existence (Test) | Attribute (Train) | Attribute (Test) |
| --- | --- | --- | --- | --- | --- | --- |
| Random | - | 0.027 | - | 0.497 | - | 0.496 |
| DQN | 0.430 (0.430) | 0.224 (0.244) | 0.742 (0.136) | 0.674 (0.279) | 0.700 (0.015) | 0.534 (0.014) |
| DDQN | 0.406 (0.406) | 0.218 (0.228) | 0.734 (0.173) | 0.626 (0.213) | 0.714 (0.021) | 0.508 (0.026) |
| Rainbow | 0.358 (0.358) | 0.190 (0.196) | 0.768 (0.187) | 0.656 (0.207) | 0.736 (0.032) | 0.496 (0.029) |
| Policy-based | 0.990 (0.990) | 0.948 (0.958) | 0.964 (0.916) | 0.948 (0.892) | 0.748 (0.048) | 0.466 (0.045) |
| Policy-based w/ Semantics | 0.886 (0.888) | 0.748 (0.768) | 0.958 (0.917) | 0.932 (0.872) | 0.580 (0.043) | 0.506 (0.044) |

The 500-game experiment gives a clearer indication of the performance increase, with the policy-based agent achieving significantly higher results than the baselines. For location type questions in the fixed map setting, the policy-based agent achieves an accuracy of 94.8% on the test-set. This is a large improvement over QAit's state-of-the-art result of 22.4%. We see a similarly large improvement for existence type questions in the fixed map setting, where the policy-based model achieved an accuracy of 94.8% compared to QAit's 67.4%. This comparison does not capture the true performance increase, which is visible in the sufficient information score, where we see a dramatic improvement in navigation and interaction: for existence questions, the policy-based method scores 0.892 compared to QAit's best score of 0.279. Interestingly, in the fixed map setting, the results for attribute type questions show worse QA accuracy but a greater sufficient information score. For all attribute type questions, neither the policy-based model nor the baselines achieve QA accuracy much higher than the random baseline. The sufficient information scores show that none of the models truly ends up in the states it should be in, so these results are most likely due to chance.
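The headline comparisons in this paragraph can be reproduced from the fixed-map, 500-game table with a few lines of Python. The dictionary layout and variable names are hypothetical; the numbers are copied from the test columns of the table.

```python
# Test-set QA accuracy from the fixed-map, 500-game table.
qa_accuracy = {
    "location": {"best_baseline": 0.224, "policy": 0.948},
    "existence": {"best_baseline": 0.674, "policy": 0.948},
}

# Absolute gain in percentage points for each question type.
gains = {
    question: round((scores["policy"] - scores["best_baseline"]) * 100, 1)
    for question, scores in qa_accuracy.items()
}
print(gains)  # {'location': 72.4, 'existence': 27.4}

# Sufficient information score for existence questions (test set).
si_ratio = round(0.892 / 0.279, 2)
print(si_ratio)  # 3.2
```

The gain of 27.4 percentage points in existence accuracy understates the change in behaviour, since the sufficient information score improves by a factor of roughly 3.2.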

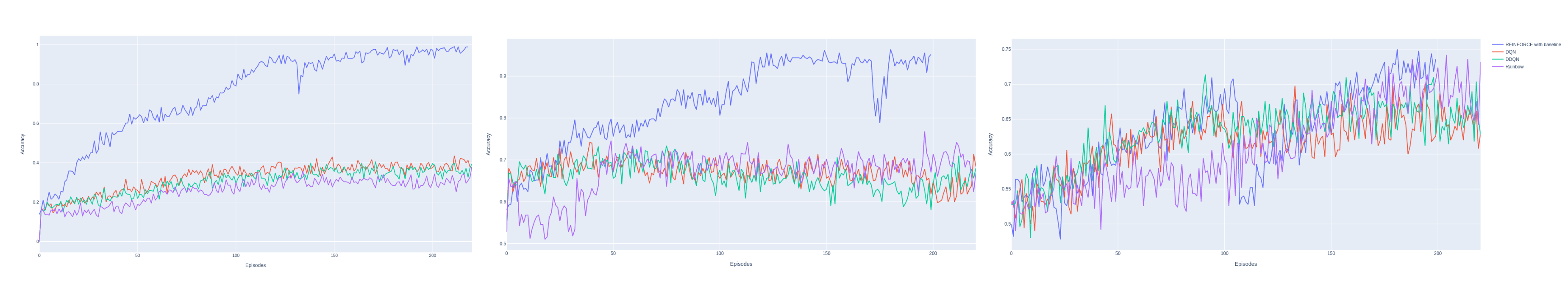

500 Games Training Curve

From the training curves, we see that the policy-based method achieves much higher performance using much less data than all the baseline methods, indicating a higher sample efficiency in this domain. After 30 000 episodes of training in the 500-game setting, the REINFORCE agent achieves results on par with the baselines. This is significant as the baselines were trained for over 200 000 episodes, indicating that the REINFORCE agent has a greatly improved sample efficiency. Furthermore, the gradient of improvement is extremely steep. This is mirrored in the single-game setting, where the REINFORCE agent achieves the baselines' results in fewer than 15 000 episodes. This is a notable result since, in general, off-policy methods, such as the QAit baselines, are more sample efficient than on-policy methods such as REINFORCE. Additionally, the REINFORCE agent only updates its weights once per episode, whereas the baselines update their weights every 20 steps, which can potentially be two or three times per episode. This suggests that learning a policy in the QAit environment is an easier task than approximating the Q-function. The use of the environment dynamics model seems to lower sample efficiency for both the DQN and policy-based methods. We believe this is due to the extra difficulty of learning game semantics, which requires more data points.

The improvement in sample efficiency indicates that policy-based methods are more suited to text-based environments. It also reduces the computational resources required at scale, thereby aiding the feasibility of future research since less training data is needed.

Conclusions and Future Work

This research presented the advantages of using a policy-based method in textual environments, specifically the QAit task, as well as the potential for a new regularisation technique to aid in textual generalisation. More specifically, we investigated the differences in training, sample efficiency and evaluation performance of the REINFORCE with baseline algorithm compared to three popular value-based reinforcement learning baselines: DQN, DDQN and Rainbow. We further investigated the use of a semantic regularisation technique to improve the performance of the baseline and policy-based methods. The results strongly suggest that policy-based reinforcement learning methods are not only more suited to textual domains due to their sample efficiency and performance, but also possess generalisation capabilities beyond their value-based counterparts. The results also show that the use of an environment dynamics model for encoding semantics is a viable regularisation technique when access to more than one training game is not possible.

A large problem with the traditional Intrinsic Curiosity Module (ICM, referred to here as the environment dynamics model) approach is that it was not designed for multiple or procedural training environments. An issue that can occur is that an agent never learns the outcome of a state-action pair because environments have different world structures, so intrinsic reward is always provided. This defeats the purpose of the ICM by not allowing an agent to learn the dynamics of the world correctly. A new way of providing intrinsic motivation in procedural environments would greatly benefit the IQA task in text-based environments, as rewards are sparse and exploration can be hard, as seen in attribute type questions. Furthermore, the use of the forward dynamics model in the process of semantic regularisation should be investigated.