Knowledge representation and reasoning (KRR) is a subfield of Artificial Intelligence. It involves modelling information in formal logics, which allows rule-based manipulations corresponding to particular modes of reasoning to be applied to that information.

Logical systems differ in expressive power. Classical logic, for instance, cannot express the notion of typicality, which makes it difficult to represent exceptions succinctly. The following scenario illustrates this limitation.

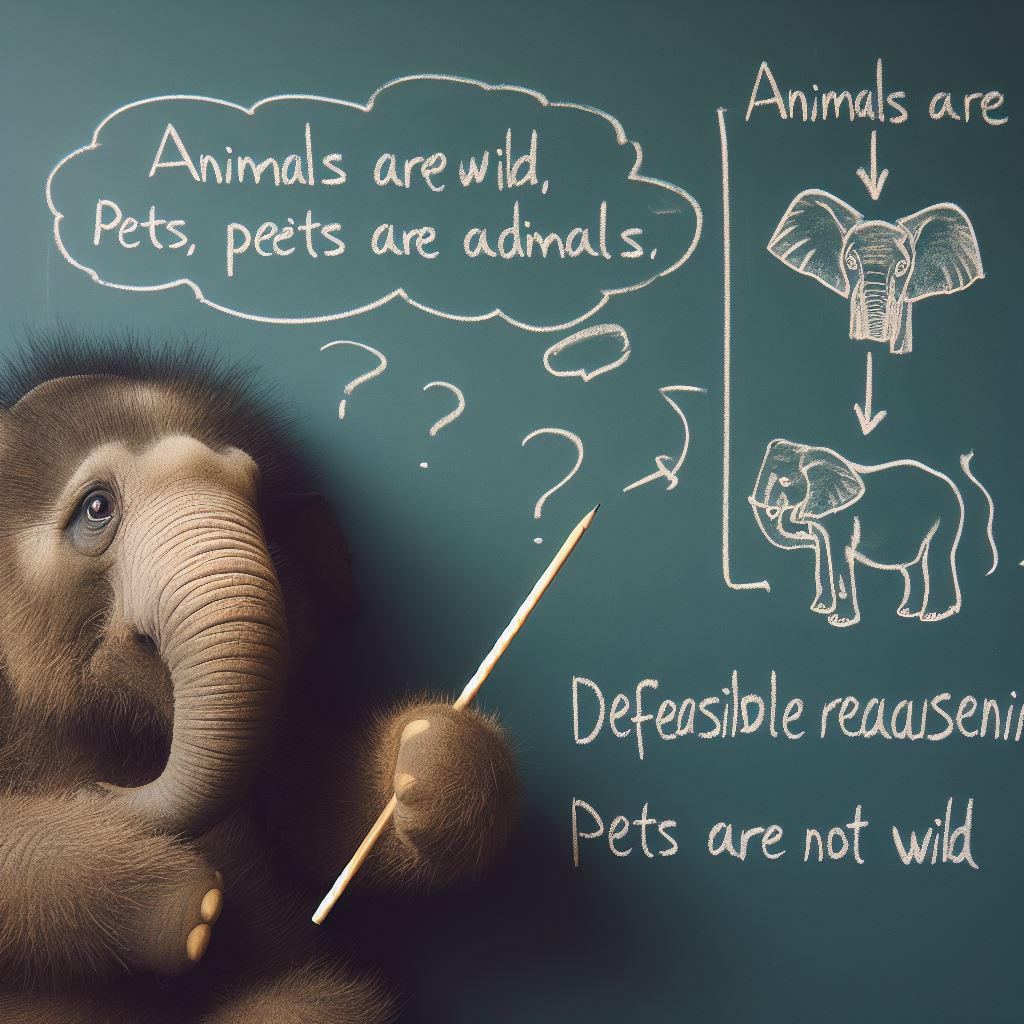

Consider the following example. Suppose we are given these facts:

- Animals are Wild

- Animals can Hunt

- Pets are Animals

From these statements we can deduce that pets are wild and that pets can hunt. However, a contradiction arises if we add the statement,

- Pets are not Wild

which states that pets, although able to hunt, are an exceptional class of animals that are not wild. Combined with our earlier deduction, this yields the contradictory conclusion that pets are both wild and not wild. As a result, we can no longer reason meaningfully about pets, because their very existence becomes untenable.

Despite this conflicting information, we cannot withdraw our earlier conclusion that pets are wild, because classical reasoning is monotonic: adding statements to a knowledge base never invalidates conclusions already drawn from it. The only way to reconcile the new statement with the existing ones is to conclude that pets do not exist.
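The argument above can be checked mechanically. The following is a minimal Python sketch, assuming each statement is read as a propositional implication about a single arbitrary individual (a simplification of a first-order or description-logic reading); the helper names `models` and `entails` are hypothetical. It enumerates every truth assignment and confirms that adding "Pets are not Wild" keeps the conclusion that pets are wild and additionally forces the conclusion that there are no pets.

```python
from itertools import product

# Hypothetical propositional encoding: each atom describes one arbitrary individual.
ATOMS = ["animal", "pet", "wild", "hunt"]


def implies(a, b):
    return (not a) or b


# Each statement is a constraint on one valuation (a dict mapping atom -> bool).
KB = [
    lambda v: implies(v["animal"], v["wild"]),  # Animals are Wild
    lambda v: implies(v["animal"], v["hunt"]),  # Animals can Hunt
    lambda v: implies(v["pet"], v["animal"]),   # Pets are Animals
]
PETS_NOT_WILD = lambda v: implies(v["pet"], not v["wild"])  # Pets are not Wild


def models(kb):
    """Yield every valuation of ATOMS that satisfies all statements in kb."""
    for values in product([False, True], repeat=len(ATOMS)):
        v = dict(zip(ATOMS, values))
        if all(s(v) for s in kb):
            yield v


def entails(kb, query):
    """Classical entailment: the query holds in every model of kb."""
    return all(query(v) for v in models(kb))


pets_are_wild = lambda v: implies(v["pet"], v["wild"])
no_pets = lambda v: not v["pet"]

print(entails(KB, pets_are_wild))                    # True
print(entails(KB + [PETS_NOT_WILD], pets_are_wild))  # True: monotonicity
print(entails(KB, no_pets))                          # False
print(entails(KB + [PETS_NOT_WILD], no_pets))        # True: pets cannot exist
```

Only models in which `pet` is false survive once the exception is added, so "pets are wild" still holds (vacuously) and "there are no pets" becomes a consequence.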

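To account for pets being a non-wild class of animals, we need a different form of reasoning. We could modify the knowledge base to treat pets as an explicit exception, for example by restricting "Animals are Wild" to animals that are not pets, but this does not scale: every further exception would require restructuring the knowledge base again. Defeasible reasoning is non-monotonic, meaning that conclusions can be withdrawn in the light of new information, and it is designed to handle exactly such exceptions.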
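To make this concrete, the sketch below applies rational closure, one KLM-style form of defeasible entailment, to the pets example. It is a minimal illustration under stated assumptions, not the project's implementation: statements are again read propositionally about one individual, "Animals are Wild", "Animals can Hunt" and "Pets are not Wild" are treated as defeasible ("typically") statements, "Pets are Animals" is kept classical, and the function names `base_rank` and `rational_closure` are hypothetical. Defeasible statements are ranked by how exceptional their antecedents are, and a query discards the lowest ranks until its antecedent is consistent.

```python
from itertools import product

ATOMS = ["animal", "pet", "wild", "hunt"]  # hypothetical propositional encoding


def implies(a, b):
    return (not a) or b


def entails(kb, query):
    """Classical entailment by enumerating all valuations of ATOMS."""
    for values in product([False, True], repeat=len(ATOMS)):
        v = dict(zip(ATOMS, values))
        if all(s(v) for s in kb) and not query(v):
            return False
    return True


CLASSICAL = [lambda v: implies(v["pet"], v["animal"])]  # Pets are Animals

# Defeasible statements "typically, if antecedent then consequent".
DEFEASIBLE = [
    (lambda v: v["animal"], lambda v: v["wild"]),   # Animals are typically Wild
    (lambda v: v["animal"], lambda v: v["hunt"]),   # Animals can typically Hunt
    (lambda v: v["pet"], lambda v: not v["wild"]),  # Pets are typically not Wild
]


def materialise(statements):
    """Read each defeasible statement as a classical implication."""
    return [lambda v, a=a, c=c: implies(a(v), c(v)) for a, c in statements]


def base_rank(classical, defeasible):
    """Split defeasible statements into ranks 0, 1, ... plus an infinite rank."""
    ranks, current = [], list(defeasible)
    while current:
        kb = classical + materialise(current)
        # A statement is exceptional if its antecedent is classically impossible.
        exceptional = [(a, c) for a, c in current
                       if entails(kb, lambda v, a=a: not a(v))]
        if len(exceptional) == len(current):
            break  # the remaining statements have infinite rank
        ranks.append([s for s in current if s not in exceptional])
        current = exceptional
    return ranks, current


def rational_closure(classical, defeasible, antecedent, consequent):
    """Check 'antecedent typically implies consequent' under rational closure."""
    ranks, infinite = base_rank(classical, defeasible)
    strict = classical + materialise(infinite)
    # Discard the lowest ranks until the antecedent is consistent.
    for i in range(len(ranks) + 1):
        kb = strict + materialise([s for rank in ranks[i:] for s in rank])
        if not entails(kb, lambda v: not antecedent(v)):
            return entails(kb, lambda v: implies(antecedent(v), consequent(v)))
    return True  # antecedent impossible even classically: holds vacuously


pet = lambda v: v["pet"]
print(rational_closure(CLASSICAL, DEFEASIBLE, pet, lambda v: not v["wild"]))  # True
print(rational_closure(CLASSICAL, DEFEASIBLE, pet, lambda v: v["wild"]))      # False
print(rational_closure(CLASSICAL, DEFEASIBLE, pet, lambda v: v["hunt"]))      # False
```

Unlike the classical reading, pets are now typically not wild, animals in general remain typically wild, and no contradiction arises. Rational closure does not, however, conclude that pets can hunt: discarding rank 0 also discards "Animals can Hunt", a known limitation of rational closure often called the drowning effect.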

Project Objectives

The primary aims of the project are:

- To explore and analyse the theory and algorithms underlying classical reasoning, as well as the motivation for explanation services, specifically justifications.

- To explore and analyse the relevant theory and algorithms for KLM-style defeasible entailment and justification so as to present explanations in a user-friendly manner.

- To develop a software tool with a simple interface that implements these algorithms, so that it can serve as a debugging service for complex knowledge bases.

- To document and explain the inner workings of the tool so that other researchers can improve it or adapt it to other defeasible reasoning algorithms and usage scenarios.