Explanations In Logic-Based Reasoning Systems

BY SOLOMON MALESA AND CILLIERS PRETORIUS

ABOUT

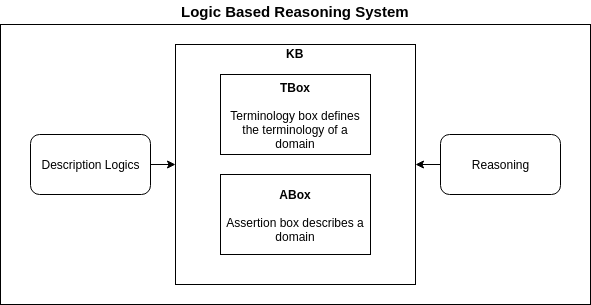

A logic-based reasoning system is a software system that generates conclusions that are consistent with a knowledge base (KB).

This KB consists of a set of propositions that are interpreted to be true.

Logic-based reasoning systems are increasingly adopted as primary advisers in industries such as medicine, e-commerce, and finance, where they perform medical diagnosis, product recommendation, and stock market decision-making, respectively. Although adoption has risen, acceptance of the conclusions these systems generate is not guaranteed, because the steps taken to reach those conclusions are usually hidden from the user.
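To make the definition above concrete, the following is a minimal Python sketch of a toy reasoner. It assumes a KB restricted to atomic concept inclusions such as Dog ⊑ Mammal, which is far weaker than the ALC setting discussed later. The sketch derives every conclusion entailed by transitivity and also records the premises behind each conclusion, so the otherwise hidden steps can be shown to the user. The names `reason` and `show_steps` are hypothetical and are not taken from the project.

```python
from itertools import product

# Hypothetical toy KB: an axiom (A, B) reads "every A is a B", i.e. A ⊑ B.
# Only atomic concept inclusions are supported, far less than ALC.
Axiom = tuple[str, str]


def reason(kb: set[Axiom]) -> dict[Axiom, tuple[Axiom, ...]]:
    """Derive every inclusion entailed by transitivity, recording its premises.

    Axioms stated in the KB map to an empty tuple; a derived conclusion A ⊑ D
    maps to the two premises (A ⊑ B, B ⊑ D) it was first derived from.
    """
    derived: dict[Axiom, tuple[Axiom, ...]] = {ax: () for ax in kb}
    changed = True
    while changed:  # forward chaining until a pass adds nothing new
        changed = False
        # Iterate over a snapshot so premises always predate their conclusion.
        for (a, b), (c, d) in product(list(derived), repeat=2):
            if b == c and (a, d) not in derived:
                derived[(a, d)] = ((a, b), (c, d))
                changed = True
    return derived


def show_steps(goal: Axiom, derived: dict[Axiom, tuple[Axiom, ...]], depth: int = 0) -> list[str]:
    """Render the derivation of `goal` as indented lines (a rudimentary proof tree)."""
    premises = derived[goal]
    how = "stated in KB" if not premises else "by transitivity"
    lines = ["  " * depth + f"{goal[0]} ⊑ {goal[1]}  ({how})"]
    for premise in premises:
        lines.extend(show_steps(premise, derived, depth + 1))
    return lines
```

For a KB containing Dog ⊑ Mammal and Mammal ⊑ Animal, `show_steps(("Dog", "Animal"), reason(kb))` returns the conclusion followed by its two indented premises, both marked as stated in the KB.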

Characteristics of Explanations

In order to evaluate the quality of explanations, we must define criteria that determine what makes an explanation a good one.

- Fidelity is a measure of how well the explanation represents the underlying actions of the logic-based reasoning system.

- Understandability is a measure of how well the user understands the presented explanation.

- Sufficiency focuses on whether the KB of the system contains the information required to generate an explanation.

- Construction Overhead is a measure of the computational difficulty of generating explanations.

- Efficiency focuses on the impact of generating an explanation on the run-time of reasoning systems.

Challenges in Current Explanations

The scarcity of explanations in reasoning systems suggests that the area concerned with generating explanations, or with augmenting reasoning systems with explanation facilities, is either relatively new or riddled with challenges.

- Narrow. Explanation systems seem to allow only a limited variety of queries.

- Inflexible. The presentation of explanations is limited to text and does not cater for other formats, such as graphical ones.

- Insensitive. Many explanation facilities cannot be tailored to users’ unique requirements.

- Inextensible. Users cannot modify or extend many existing explanation facilities.

- Unresponsive. Many explanation facilities are not able to elaborate on their explanations or answer follow-up questions.

OBJECTIVES

This section outlines the objectives of our project. The project is composed of a theoretical component and a practical component.

Theoretical Objectives

The scope of the theoretical component is the generation of justification-based explanations expressed in Attributive Language with Complements (ALC) Description Logic (DL), and their translation into natural-language explanations.

- Introduce ALC DL.

- Show how to generate single and all possible justifications.

- Show how to generate proof-trees.

- Show how to translate ALC DL propositions into English propositions.

- Describe how to present proof-trees as explanations.
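A justification for an entailment is commonly defined as a minimal subset of the KB that still entails it. The Python sketch below illustrates finding a single justification and all justifications, and a naive English rendering, under a strong simplifying assumption: axioms are atomic inclusions (A, B) read as A ⊑ B, so entailment reduces to graph reachability instead of calling a full ALC reasoner. `single_justification` uses a black-box contraction strategy (drop each axiom whose removal keeps the entailment) and `all_justifications` uses brute-force subset enumeration. These are illustrative choices, not necessarily the project's algorithms, and all function names are hypothetical.

```python
from itertools import combinations

# Hypothetical simplification: an axiom (A, B) stands for the inclusion A ⊑ B.
# A real ALC setting would call a DL reasoner inside `entails` instead.
Axiom = tuple[str, str]


def entails(kb: frozenset[Axiom], goal: Axiom) -> bool:
    """KB ⊨ A ⊑ B holds here iff B is reachable from A along the inclusions."""
    start, target = goal
    seen, frontier = {start}, [start]
    while frontier:
        current = frontier.pop()
        for sub, sup in kb:
            if sub == current and sup not in seen:
                seen.add(sup)
                frontier.append(sup)
    return target in seen


def single_justification(kb: frozenset[Axiom], goal: Axiom) -> frozenset[Axiom]:
    """Black-box contraction: drop every axiom whose removal keeps the entailment."""
    if not entails(kb, goal):
        raise ValueError("goal is not entailed by the KB")
    kept = set(kb)
    for ax in sorted(kb):  # sorted only to make the result deterministic
        if entails(frozenset(kept - {ax}), goal):
            kept.discard(ax)
    return frozenset(kept)


def all_justifications(kb: frozenset[Axiom], goal: Axiom) -> list[frozenset[Axiom]]:
    """Brute force: test subsets by increasing size, skipping supersets of earlier finds."""
    found: list[frozenset[Axiom]] = []
    axioms = sorted(kb)
    for size in range(len(axioms) + 1):
        for subset in combinations(axioms, size):
            candidate = frozenset(subset)
            if not any(j <= candidate for j in found) and entails(candidate, goal):
                found.append(candidate)
    return found


def to_english(ax: Axiom) -> str:
    """Naive verbalisation of A ⊑ B as an English sentence (names assumed non-empty)."""
    sub, sup = ax
    article = "an" if sup[0].lower() in "aeiou" else "a"
    return f"Every {sub} is {article} {sup}."


kb = frozenset({("Dog", "Mammal"), ("Mammal", "Animal"), ("Dog", "Pet"), ("Pet", "Animal")})
goal = ("Dog", "Animal")
for justification in all_justifications(kb, goal):
    print([to_english(ax) for ax in sorted(justification)])
# ['Every Dog is a Mammal.', 'Every Mammal is an Animal.']
# ['Every Dog is a Pet.', 'Every Pet is an Animal.']
```

Contraction yields a minimal set because entailment is monotonic: an axiom whose removal broke the entailment from a larger set is still needed in any smaller set. Subset enumeration is exponential in the size of the KB, so it only suits small examples.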

Practical Objectives

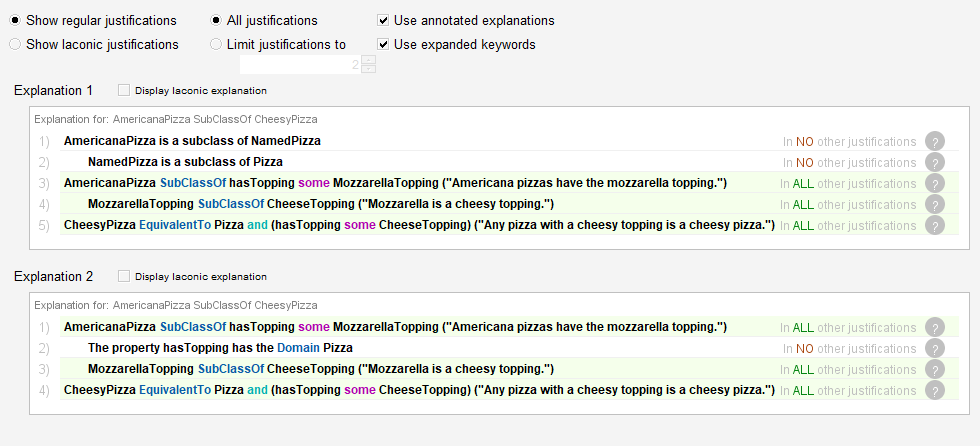

The Protégé OWL Ontology Editor is a tool to develop, debug, and reason with ontologies. It provides explanations through a tool developed by Matthew Horridge, but the explanations are difficult to understand for users who are not familiar with description logics or ontologies in general.

With the overarching goal of improved readability and more effective explanations, two methods are considered. The first method is to allow the creator of an ontology to define an explanation for an axiom, which is then displayed as the explanation for that particular axiom. The second method is to expand the keywords used in the axiom into more natural language.
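The two methods can be sketched in Python as follows, under stated assumptions. The keyword-to-phrase table is hypothetical, since the text does not list the project's actual wording, and creator-defined explanations are modelled as a plain dictionary keyed by the axiom's Manchester Syntax string rather than through Protégé's own data structures. The hypothetical `explain` function prefers the creator's explanation and otherwise falls back to keyword expansion.

```python
import re

# Hypothetical phrasings for some Manchester Syntax keywords; the project's
# actual keyword list and wording may differ.
KEYWORD_PHRASES = {
    "SubClassOf": "is a kind of",
    "EquivalentTo": "is the same as",
    "DisjointWith": "has no members in common with",
    "some": "at least one",
    "only": "nothing but",
    "min": "at least",
    "max": "at most",
}

# Whole-word match so entity names such as "handsome" are left untouched.
_KEYWORD_RE = re.compile(r"\b(" + "|".join(map(re.escape, KEYWORD_PHRASES)) + r")\b")


def expand_keywords(axiom: str) -> str:
    """Second method: replace Manchester Syntax keywords with more natural phrases."""
    return _KEYWORD_RE.sub(lambda m: KEYWORD_PHRASES[m.group(1)], axiom)


def explain(axiom: str, creator_explanations: dict[str, str]) -> str:
    """First method takes priority: use the ontology creator's explanation if one
    exists, otherwise fall back to keyword expansion."""
    if axiom in creator_explanations:
        return creator_explanations[axiom]
    return expand_keywords(axiom)


creator_explanations = {
    "Dog SubClassOf Mammal": "Dogs are mammals because they nurse their young.",
}
print(explain("Dog SubClassOf Mammal", creator_explanations))
# Dogs are mammals because they nurse their young.
print(explain("Cat DisjointWith Dog", creator_explanations))
# Cat has no members in common with Dog
```

Word-by-word substitution still leaves nested restrictions such as `hasOwner some Person` somewhat stilted, which is one reason a creator-defined explanation can be more effective for complex axioms.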

THEORY

The theoretical component of this project develops a theoretical framework for generating explanations as shown in the following figure.

IMPLEMENTATION

For the practical part of the project, the Protégé OWL Ontology Editor's Explanation Workbench plugin was extended to provide more readable and effective explanations. This was done by allowing ontology creators to define an explanation for a specific axiom, as well as expanding the keywords from the Manchester Syntax (as used by Protégé) to use more natural language.

CONCLUSION

We defined a framework for generating explanations for entailments in ALC DL ontologies. The framework has limitations: the algorithms, as presented here, are unoptimised; converting explanations to English is tricky given the complexity and ambiguity of the language; and the framework does not assess whether the user understands an explanation. However, we curate the state of the art in explanation generation and provide a thorough summary of it.

From the practical side, we provide a framework for ontology creators to define detailed explanations for particular axioms. We also increase the understandability and effectiveness of the default explanations by expanding the Manchester Syntax keywords to use more natural language. This serves as a good baseline for future work in improving explanations.

Screenshot of a Protégé explanation in its final format.