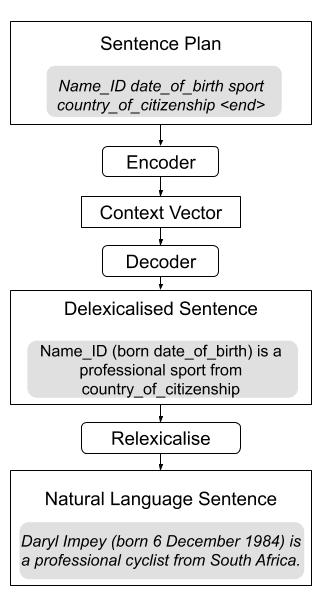

Sentence Planner

The sentence planner produces an ordered sequence of slot-types, the sentence plan,

from the collection of input tokens given to the sentence planner. The aim is to order

all the tokens so that the linguistic realiser is able to produce a coherent

utterance.

Training

The sentence planner works in a similar way to a Markov Decision Process (MDP) it l

earns the probabilities for the next slot-type given the current slot-type or pair of

slot-types. These probabilities are learnt using the tokens extracted from the

delexlicalised reference texts.

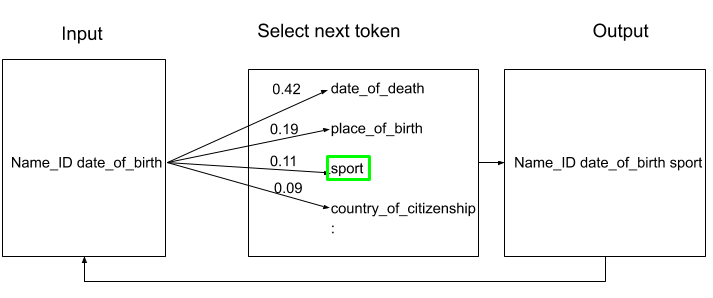

Generation

To generate a sentence plan the most likely slot-type to start an utterance is found.

This slot-type is then added to the sentence plan. The sentence plan is then generated

iteratively by adding the next slot-type that is the most likely slot-type to follow

the two most recently added slot-types. The sentence planner maintains an array of

slot-types from the input MR that must still be included in the sentence plan the

remaining array. A slot-type is only added to the sentence plan if it is in the

remaining array. With the exception of the Name_ID and <end> slot-types. Once the

remaining array is empty the sentence plan is complete. This process is shown in the

figure below for the following input and remaining arrays:

Input = [Name_ID, date_of_birth, country of citizenship, sport]

Remaining = [Name_ID, date_of_birth, country of citizenship, sport, <end>]